When to Consider Edge Computing in Software Architecture?

The term “edge computing” is becoming increasingly prevalent as more IoT devices, smartphones, tablets, and other devices come online in remote locations. So what is “edge computing” exactly, why is it important to understand its benefits and drawbacks, and when should you consider edge computing in your software architecture?

What is Edge Computing?

Edge computing is the practice of analyzing and processing data as close to the source device as possible, at the “edge” of the network. Until recently, the prevailing data transfer model involved moving data from network devices back to a central data warehouse for processing and analysis.

However, as devices come online in places that are very far removed from the central data center or HQ, this practice becomes less tenable due to network latency and bandwidth issues.
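To make the latency point concrete, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at roughly 200,000 km per second (about two thirds of the speed of light in a vacuum) and ignores routing, queuing, and processing delays, so real round trips will be slower. The function name `min_round_trip_ms` and the example distances are illustrative assumptions, not figures from a specific network.

```python
# Assumed propagation speed in optical fiber: ~200,000 km/s, i.e. 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances between a device and the place its data is processed.
    for label, km in [("nearby edge hub", 50), ("distant data center", 2000)]:
        print(f"{label}: at least {min_round_trip_ms(km):.1f} ms per round trip")
```

Even this optimistic floor grows linearly with distance, which is why moving processing closer to the device helps time-sensitive workloads.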

How Does Edge Computing Work?

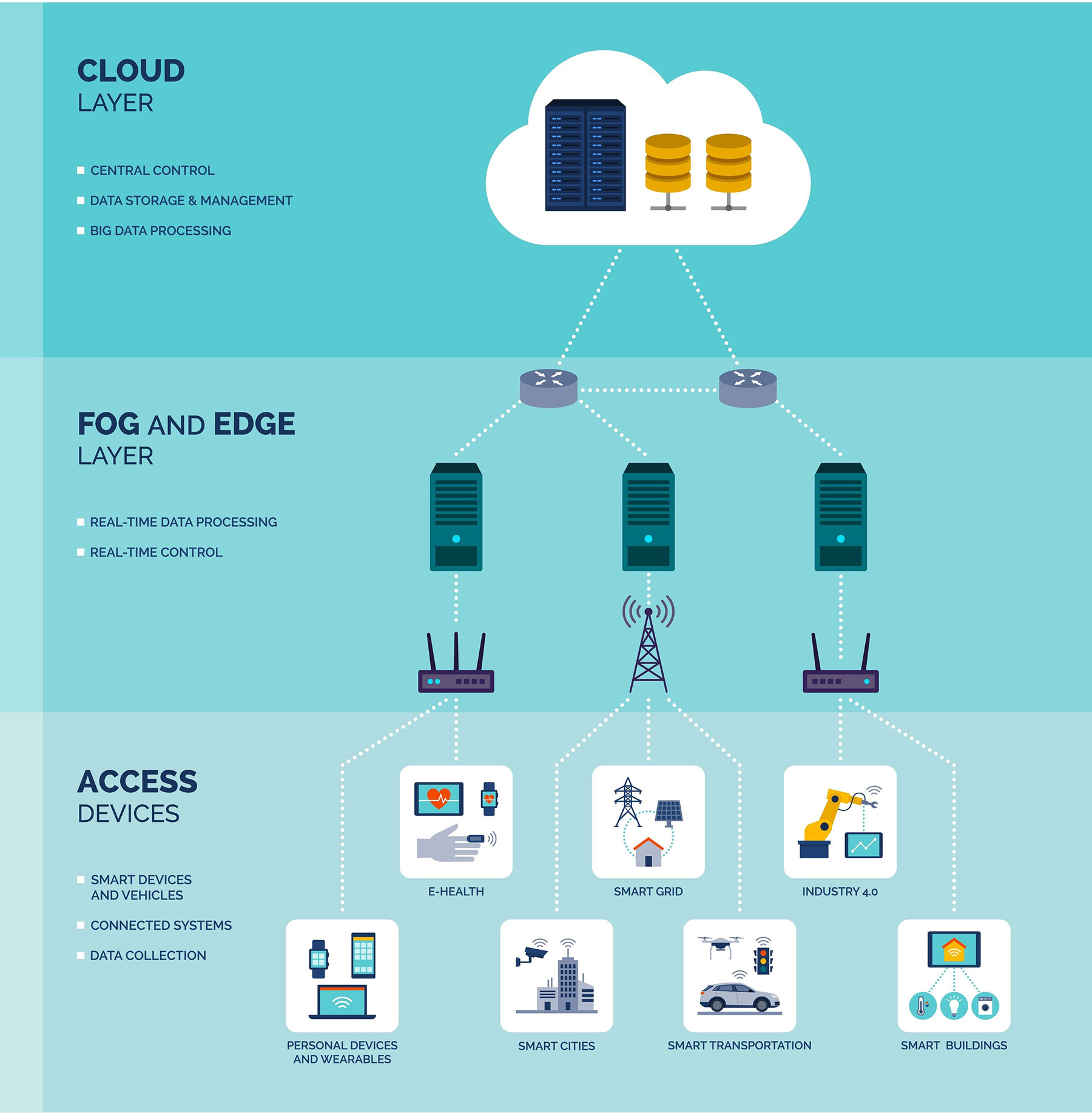

Edge computing moves the bulk of the data processing responsibility closer to the device that needs the data. This might mean utilizing a device’s local storage to hold onto files that would otherwise be sent to a server or using the device itself to manage data analysis. Or it could mean extending the central network by building more hubs in locations closer to places where devices are being used.

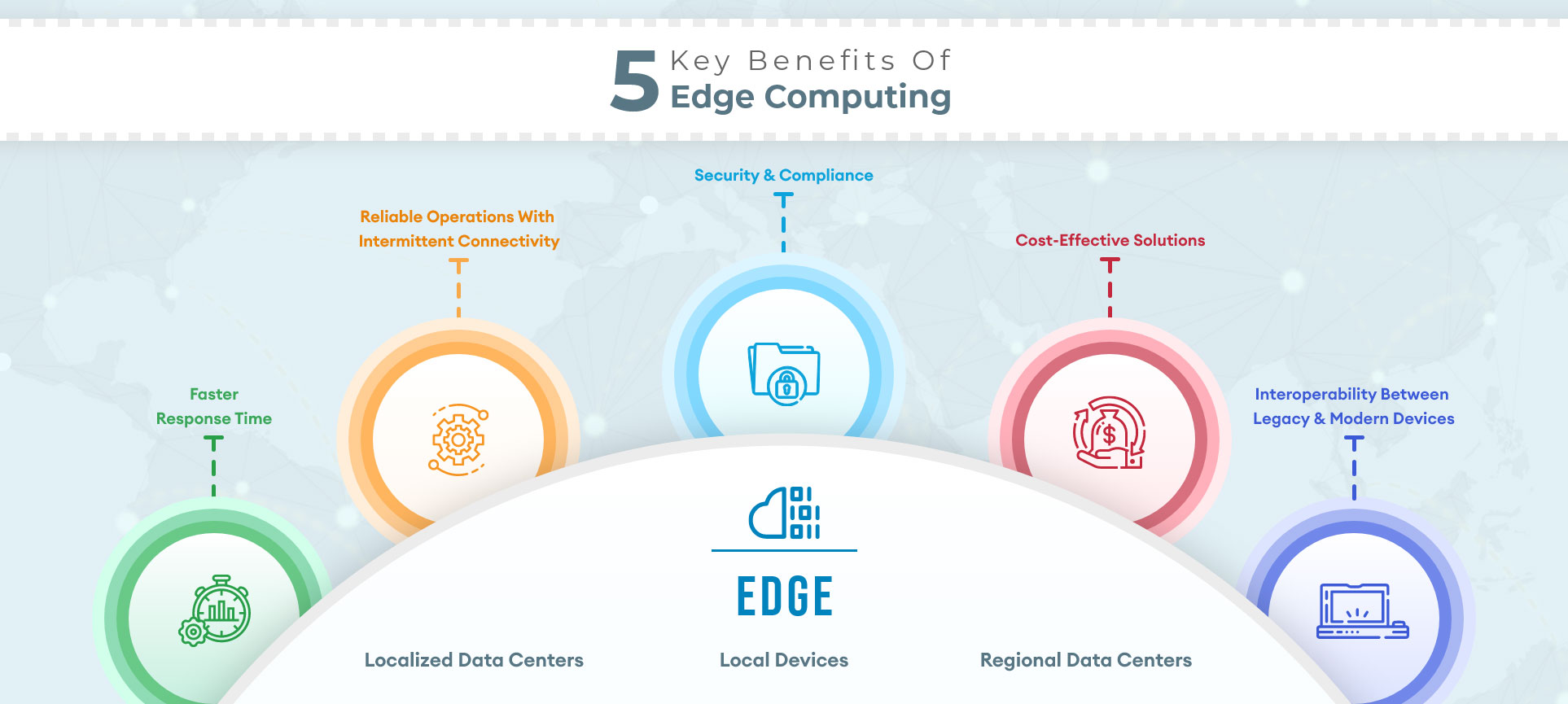
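As a minimal sketch of what "processing on the device" could look like, the example below keeps raw sensor readings in local memory and produces only a small summary once a window fills up. The class name `EdgeBuffer`, the `Summary` fields, and the window size are all hypothetical choices for illustration; a real device would also need persistence and an upload step.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Summary:
    """Compact result that would be sent upstream instead of raw readings."""
    count: int
    minimum: float
    maximum: float
    average: float


class EdgeBuffer:
    """Hypothetical on-device buffer that keeps raw readings local."""

    def __init__(self, window_size: int) -> None:
        self.window_size = window_size
        self._readings: List[float] = []

    def add(self, value: float) -> Optional[Summary]:
        """Store a reading locally; return a summary once the window is full."""
        self._readings.append(value)
        if len(self._readings) < self.window_size:
            return None
        summary = Summary(
            count=len(self._readings),
            minimum=min(self._readings),
            maximum=max(self._readings),
            average=mean(self._readings),
        )
        self._readings.clear()  # start a fresh window
        return summary
```

With a window of 60 readings, for example, the device would send one summary for every 60 raw values it collects.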

Benefits of Edge Computing

The primary benefit of edge computing is improved response times, as data does not need to travel to a data center for processing. Additional benefits include optimized bandwidth, as only the most important data is transferred between devices and central data hubs, and a reduced security risk footprint, as less unencrypted data is sent over the network.
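One common way to send "only the most important data" is a simple change filter: a reading is forwarded only when it differs enough from the last value that was sent. The sketch below illustrates that idea; the function name `significant_changes` and the threshold value are assumptions for this example, and what counts as "important" will depend on the application.

```python
from typing import Iterable, Iterator


def significant_changes(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Yield a reading only if it differs from the last sent value by at least threshold."""
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) >= threshold:
            last_sent = value
            yield value


if __name__ == "__main__":
    temperatures = [20.0, 20.1, 20.2, 21.5, 21.6, 19.0]
    # With a threshold of 1.0, only 3 of the 6 readings would leave the device.
    print(list(significant_changes(temperatures, threshold=1.0)))
```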

Edge vs Cloud Computing

Cloud computing is the practice of using many remote servers spread across the network to store, analyze, and manage data. Edge computing is not a replacement or competitor for cloud computing. Rather, companies are beginning to understand how edge computing and cloud computing can coexist.

The best-case scenario will see companies selectively using edge computing for certain kinds of data processing and pushing other tasks to the cloud. For instance, time-sensitive and real-time processing tasks may be performed at the edge, while processes requiring analytics and machine learning algorithms (which run more efficiently in hyper-scale data centers) might stay in the cloud.
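The split described above can be sketched as a small placement rule: if a task needs its result sooner than a cloud round trip allows, run it at the edge; otherwise send it to the cloud. The `Task` type, the `choose_target` function, and the 150 ms round-trip figure are hypothetical and only meant to illustrate the decision, not to prescribe a threshold.

```python
from dataclasses import dataclass

# Assumed round-trip time to the cloud; a real system would measure this.
CLOUD_ROUND_TRIP_MS = 150


@dataclass
class Task:
    name: str
    max_latency_ms: int  # how quickly a result is needed


def choose_target(task: Task) -> str:
    """Return "edge" for tasks the cloud cannot answer in time, else "cloud"."""
    if task.max_latency_ms < CLOUD_ROUND_TRIP_MS:
        return "edge"
    return "cloud"


if __name__ == "__main__":
    for task in [Task("emergency stop", 10), Task("weekly trend report", 60_000)]:
        print(f"{task.name} -> {choose_target(task)}")
```

In practice the rule would likely weigh more than latency, such as compute requirements, data volume, and cost, but the same shape of decision applies.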

What is IoT, and What Are Edge Devices?

IoT, or the “Internet of Things,” describes the network of physical objects with sensors, processing abilities, and other technologies that allow them to manage and exchange data with other devices on the network. “Smart” devices are a good example of the “Internet of Things.”

Frequently, these devices need to process and analyze data quickly; automated machines on manufacturing floors and in hospitals are two examples. It isn’t practical for these devices to continuously send and receive network requests. For that reason, IoT devices are also usually “edge devices.” They manage their own data processing and analysis.

Challenges of Edge Computing

While there are many benefits of edge computing, there are also inherent challenges and risks. Although edge computing reduces the network security risk by requiring fewer unencrypted network requests to be sent, pushing data processing to edge devices does make those devices more vulnerable to attack.

Additionally, the sensors and processors in edge devices must be properly maintained in order for the devices to function. Rather than maintaining a single processing unit in a data center, edge computing requires many units to be maintained at the edge of the network, often in remote locations.
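One small piece of that maintenance burden is simply knowing which devices have gone quiet. The sketch below assumes each device periodically reports a heartbeat timestamp to a central service; the function name `stale_devices`, the device IDs, and the timeout are hypothetical and stand in for whatever monitoring a real deployment uses.

```python
from typing import Dict, List


def stale_devices(last_seen: Dict[str, float], now: float, timeout_s: float) -> List[str]:
    """Return IDs of devices whose last heartbeat is older than timeout_s seconds."""
    return sorted(
        device_id
        for device_id, timestamp in last_seen.items()
        if now - timestamp > timeout_s
    )


if __name__ == "__main__":
    # Hypothetical heartbeat times in seconds since some shared reference point.
    heartbeats = {"pump-1": 100.0, "pump-2": 40.0, "valve-7": 95.0}
    print(stale_devices(heartbeats, now=110.0, timeout_s=30.0))
```

A check like this only flags a problem. Someone still has to reach the device, which is the harder part when units are spread across remote locations.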

Once you have taken these challenges into consideration, you will be better prepared to decide where edge computing fits in your software architecture strategy.